This post continues our previous blog post about PCI DSS compliance on GCP with more examples of PCI requirements:

PCI Requirement 5: Protect all systems against malware and regularly update anti-virus software or programs

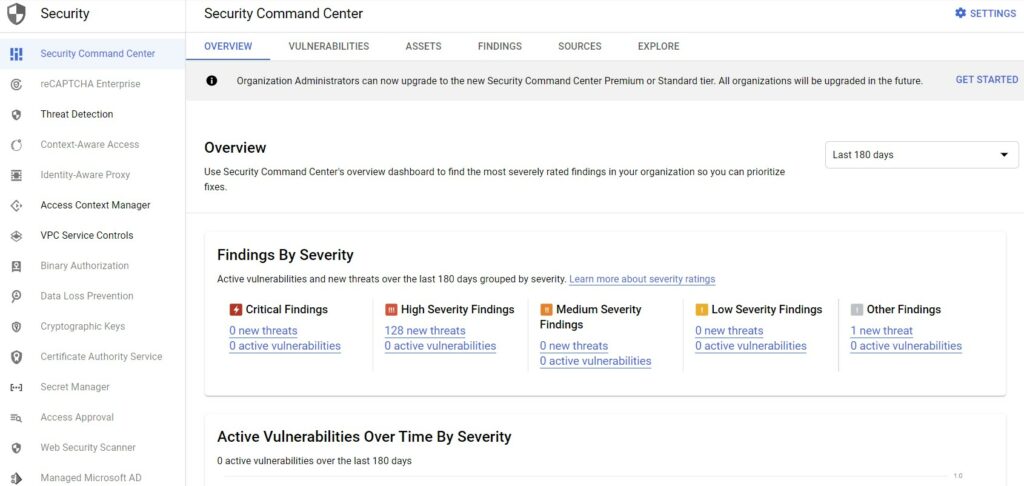

Cloud Security Command Center helps security teams gather data, identify threats, and respond to threats before they result in business damage or loss. It offers deep insight into application and data risk so that you can quickly mitigate threats to your cloud resources and evaluate overall health. With Cloud Security Command Center, you can view and monitor an inventory of your cloud assets, scan storage systems for sensitive data, detect common web vulnerabilities, and review access rights to your critical resources, all from a single, centralized dashboard. It can help you comply with several requirements, including sections 5 and 6.6.

GCP Cloud Security Command Center


PCI Requirement 6: Develop and maintain secure systems and applications

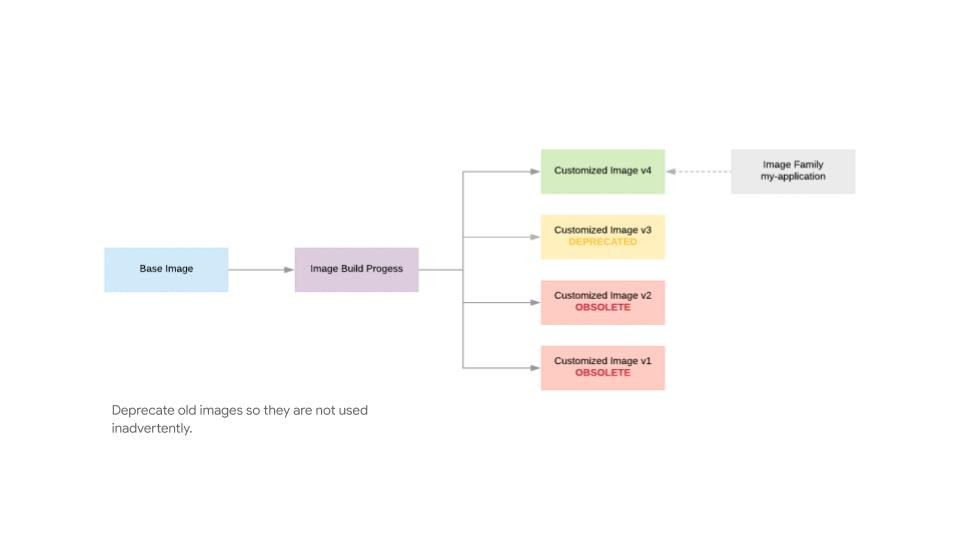

PCI Requirement 6.2 talks about ensuring all systems are patched with the latest security patches, with critical patches installed within one month of release. Having a robust image pipeline can help with this, by creating new images with the patches and deprecating images that aren’t patched, so that old and obsolete images aren’t used inadvertently.

- Use image families to make sure your automation and users use the latest image

- Use state flags to mark images as DEPRECATED, OBSOLETE, DELETED
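As a rough illustration of how these two mechanisms interact, the Python sketch below models family resolution (a family points at the newest image that carries no deprecation state) and builds the `gcloud compute images deprecate` command for retiring an image. The image names, the `pci-base` family, and the helper functions are hypothetical; only the state values and the gcloud subcommand come from Compute Engine.

```python
from datetime import datetime

# Deprecation states that remove an image from family resolution.
RETIRED_STATES = {"DEPRECATED", "OBSOLETE", "DELETED"}

# Hypothetical image inventory for illustration only.
IMAGES = [
    {"name": "pci-base-v1", "family": "pci-base", "created": "2019-01-10T00:00:00", "state": "OBSOLETE"},
    {"name": "pci-base-v2", "family": "pci-base", "created": "2019-02-10T00:00:00", "state": "DEPRECATED"},
    {"name": "pci-base-v3", "family": "pci-base", "created": "2019-03-10T00:00:00"},
]


def latest_in_family(images, family):
    """Return the newest image in `family` that has no deprecation state, or None."""
    candidates = [
        img for img in images
        if img["family"] == family and img.get("state", "ACTIVE") not in RETIRED_STATES
    ]
    if not candidates:
        return None
    return max(candidates, key=lambda img: datetime.fromisoformat(img["created"]))


def deprecate_command(image_name, state, replacement=None):
    """Build the gcloud argument list that sets a deprecation state on an image."""
    if state not in RETIRED_STATES | {"ACTIVE"}:
        raise ValueError(f"unknown state: {state}")
    cmd = ["gcloud", "compute", "images", "deprecate", image_name, f"--state={state}"]
    if replacement:
        cmd.append(f"--replacement={replacement}")
    return cmd


if __name__ == "__main__":
    print(latest_in_family(IMAGES, "pci-base")["name"])
    print(" ".join(deprecate_command("pci-base-v2", "DEPRECATED", "pci-base-v3")))
```

Automation that refers to the family rather than to a specific image name picks up the patched image as soon as it is published, which is the behaviour the first bullet relies on.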

GCP PCI Image life cycle


PCI Requirement 7: Restrict access to cardholder data by business need to know

PCI Requirements:

7.1 Limit access to system components and cardholder data to only those individuals whose job requires such access.

7.1.1 Define access needs for each role, including:

• System components and data resources that each role needs to access for their job function

• Level of privilege required (for example, user, administrator, etc.) for accessing resources.

7.1.2 Restrict access to privileged user IDs to least privileges necessary to perform job responsibilities.

7.1.3 Assign access based on individual personnel’s job classification and function.

7.2 Establish an access control system(s) for systems components that restricts access based on a user’s need to know, and is set to “deny all” unless specifically allowed.

This access control system(s) must include the following:

7.2.1 Coverage of all system components.

7.2.2 Assignment of privileges to individuals based on job classification and function.

7.2.3 Default “deny all” setting.

Once access needs for each job function are defined, custom roles can be created to provide granular control over the exact permissions needed to access system components and data resources.

- Create groups based on job functions, and assign custom roles to those groups

- Job function groups can be nested in job classification groups

- Custom roles can be defined at the organizational level

- Review available permissions and their purpose through the API Explorer (search for the product)

- Combine predefined roles

- Combine permissions
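To make the idea of combining roles and permissions concrete, here is a minimal Python sketch that assembles a custom role definition from predefined roles plus individual permissions. The `PREDEFINED` table lists only a small illustrative subset of each role's permissions, and `build_custom_role` is a hypothetical helper. The output keys (`title`, `description`, `stage`, `includedPermissions`) follow the fields of a role definition file as accepted by `gcloud iam roles create --file`.

```python
# Illustrative subsets only; the real predefined roles contain more permissions.
PREDEFINED = {
    "roles/compute.viewer": {"compute.instances.get", "compute.instances.list"},
    "roles/logging.viewer": {"logging.logEntries.list", "logging.logs.list"},
}


def build_custom_role(title, description, source_roles=(), extra_permissions=(), excluded=()):
    """Merge permissions from predefined roles and explicit permissions into one role definition."""
    permissions = set(extra_permissions)
    for role in source_roles:
        permissions |= PREDEFINED[role]
    # Drop anything the job function does not need (least privilege).
    permissions -= set(excluded)
    return {
        "title": title,
        "description": description,
        "stage": "GA",
        "includedPermissions": sorted(permissions),
    }


if __name__ == "__main__":
    role = build_custom_role(
        title="PCI Auditor",
        description="Read-only view of instances and logs in the CDE",
        source_roles=["roles/compute.viewer", "roles/logging.viewer"],
        excluded=["logging.logs.list"],
    )
    for permission in role["includedPermissions"]:
        print(permission)
```

Starting from the permissions a job function needs and removing the rest keeps the role aligned with the "deny all unless specifically allowed" wording of Requirement 7.2.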

PCI Requirement 7: continuing…

Understanding IAM core principles is key to implementing separation of duties and least privilege for PCI compliance, as defined in Requirement 7.

- Users are typically humans – think AD/LDAP users

- Service accounts are typically “robot accounts” assigned to a service with only the permissions that service/robot needs to do its job

- Groups are a collection of users

- IAM roles are a set of permissions

Read the relationships from top to bottom:

- Users can be part of groups

- Service accounts can be part of groups

- Users and groups can be granted rights to service accounts via IAM roles

- Through the IAM roles, they can be granted access to resources

- Service accounts are also resources – grant the Service Account User role to allow a user to run operations as the service account
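The relationships above can be sketched as a small default-deny check in Python. This is a simplified model, not how Cloud IAM evaluates policies internally: it ignores resource hierarchy and inheritance, and the group names, member identities, organization ID, and custom role are hypothetical. The `bindings` shape (a role plus a list of members) mirrors the IAM policy format, and the permission sets are illustrative subsets.

```python
# Illustrative role-to-permission mapping; the custom role is hypothetical.
ROLE_PERMISSIONS = {
    "organizations/123456789/roles/pciDbReader": {"cloudsql.instances.get", "cloudsql.instances.list"},
    "roles/iam.serviceAccountUser": {"iam.serviceAccounts.actAs"},
}

# Group membership, including one nested group (job function inside job classification).
GROUPS = {
    "group:dba@example.com": {"user:alice@example.com"},
    "group:pci-operators@example.com": {
        "group:dba@example.com",
        "serviceAccount:backup@my-project.iam.gserviceaccount.com",
    },
}

POLICY = {
    "bindings": [
        {"role": "organizations/123456789/roles/pciDbReader", "members": ["group:pci-operators@example.com"]},
        {"role": "roles/iam.serviceAccountUser", "members": ["user:alice@example.com"]},
    ]
}


def expand_identities(member, groups):
    """Return the member plus every group it belongs to, directly or through nesting."""
    identities = {member}
    changed = True
    while changed:
        changed = False
        for group, members in groups.items():
            if group not in identities and identities & members:
                identities.add(group)
                changed = True
    return identities


def is_allowed(member, permission, policy=POLICY, role_permissions=ROLE_PERMISSIONS, groups=GROUPS):
    """Allow only if some binding grants a role containing the permission; otherwise deny."""
    identities = expand_identities(member, groups)
    for binding in policy["bindings"]:
        grants_permission = permission in role_permissions.get(binding["role"], set())
        if grants_permission and identities & set(binding["members"]):
            return True
    return False  # default "deny all"


if __name__ == "__main__":
    print(is_allowed("user:alice@example.com", "cloudsql.instances.get"))
    print(is_allowed("user:bob@example.com", "cloudsql.instances.get"))
```

Here `alice` gains database read access only through her job-function group, and can act as a service account only because of the explicit Service Account User binding.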

PCI Requirement 8: Identify and authenticate access to system components

Requirement 8 covers identity and authentication management, and GCP can help customers implement this both in GCP and in the customer’s own applications.

- Cloud Identity provides identity and authentication to GCP.

- Customers can leverage Cloud Identity-Aware Proxy (Cloud IAP) and other Google tools to implement identity and authentication on their applications.

- Google websites and properties use robust public key technologies to encrypt data in transit: 2048-bit RSA or P-256 ECDSA SSL certificates issued by a trusted authority (currently the Google Internet Authority G2).

PCI Requirements:

8.1 Define and implement policies and procedures to ensure proper user identification management for non-consumer users and administrators on all system components as follows:

8.1.1 Assign all users a unique ID before allowing them to access system components or cardholder data.

8.1.2 Control addition, deletion, and modification of user IDs, credentials, and other identifier objects.

8.1.3 Immediately revoke access for any terminated users.

8.1.4 Remove/disable inactive user accounts within 90 days.

8.1.5 Manage IDs used by third parties to access, support, or maintain system components via remote access as follows:

• Enabled only during the time period needed and disabled when not in use.

• Monitored when in use.
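As an example of how 8.1.4 might be checked in practice, the Python sketch below flags accounts whose last login is 90 or more days old. The account names and the `last_logins` mapping are hypothetical; in a real deployment the timestamps would come from your identity provider's reports. Treating accounts that have never logged in as inactive is an assumption of this sketch, not something the requirement spells out.

```python
from datetime import datetime, timedelta


def inactive_accounts(last_logins, now, max_idle_days=90):
    """Return accounts idle for at least `max_idle_days`, sorted by name.

    `last_logins` maps an account name to its last login time, or None if
    the account has never logged in (treated as inactive here by assumption).
    """
    cutoff = now - timedelta(days=max_idle_days)
    return sorted(
        account
        for account, last_login in last_logins.items()
        if last_login is None or last_login <= cutoff
    )


if __name__ == "__main__":
    # Hypothetical data for illustration.
    logins = {
        "alice@example.com": datetime(2019, 12, 15),
        "bob@example.com": datetime(2019, 9, 1),
        "carol@example.com": None,
    }
    for account in inactive_accounts(logins, now=datetime(2020, 1, 1)):
        print("disable:", account)
```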

8.2 In addition to assigning a unique ID, ensure proper user-authentication management for non-consumer users and administrators on all system components by employing at least one of the following methods to authenticate all users:

• Something you know, such as a password or passphrase

• Something you have, such as a token device or smart card

• Something you are, such as a biometric.

8.2.1 Using strong cryptography, render all authentication credentials (such as passwords/phrases) unreadable during transmission and storage on all system components.

8.2.3 Passwords/passphrases must meet the following:

• Require a minimum length of at least seven characters.

• Contain both numeric and alphabetic characters.

Alternatively, the passwords/passphrases must have complexity and strength at least equivalent to the parameters specified above.
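For customer applications that manage their own credentials, the baseline in 8.2.3 can be expressed as a short check. The Python function below is a minimal sketch with a hypothetical name; it covers only the two stated parameters (length and character mix), not the "equivalent strength" alternative.

```python
def meets_pci_8_2_3(password, min_length=7):
    """Check the 8.2.3 baseline: minimum length plus both numeric and alphabetic characters."""
    has_digit = any(ch.isdigit() for ch in password)
    has_alpha = any(ch.isalpha() for ch in password)
    return len(password) >= min_length and has_digit and has_alpha


if __name__ == "__main__":
    for candidate in ["abc1234", "abcdefg", "1234567", "a1b2c3"]:
        print(candidate, meets_pci_8_2_3(candidate))
```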

8.2.6 Set passwords/passphrases for first-time use and upon reset to a unique value for each user, and change immediately after the first use.

8.3 Secure all individual non-console administrative access and all remote access to the CDE using multi-factor authentication.

Note: Multi-factor authentication requires that a minimum of two of the three authentication methods (see Requirement 8.2 for descriptions of authentication methods) be used for authentication. Using one factor twice (for example, using two separate passwords) is not considered multi-factor authentication.

8.3.1 Incorporate multi-factor authentication for all non-console access into the CDE for personnel with administrative access.

8.3.2 Incorporate multi-factor authentication for all remote network access (both user and administrator, and including third-party access for support or maintenance) originating from outside the entity’s network.
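The note under 8.3 (two of the three factor categories, and one category used twice does not count) can be captured in a few lines. This Python sketch is illustrative only; the method names in `FACTOR_CATEGORIES` are hypothetical labels mapped to the three categories from Requirement 8.2.

```python
# Hypothetical method labels mapped to the three categories in Requirement 8.2.
FACTOR_CATEGORIES = {
    "password": "know",
    "passphrase": "know",
    "token_device": "have",
    "smart_card": "have",
    "biometric": "are",
}


def is_multi_factor(methods):
    """True when the methods used span at least two distinct factor categories."""
    categories = {FACTOR_CATEGORIES[method] for method in methods}
    return len(categories) >= 2


if __name__ == "__main__":
    print(is_multi_factor(["password", "token_device"]))
    print(is_multi_factor(["password", "passphrase"]))
```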

PCI Requirement 9: Track and monitor all access to network resources and cardholder data

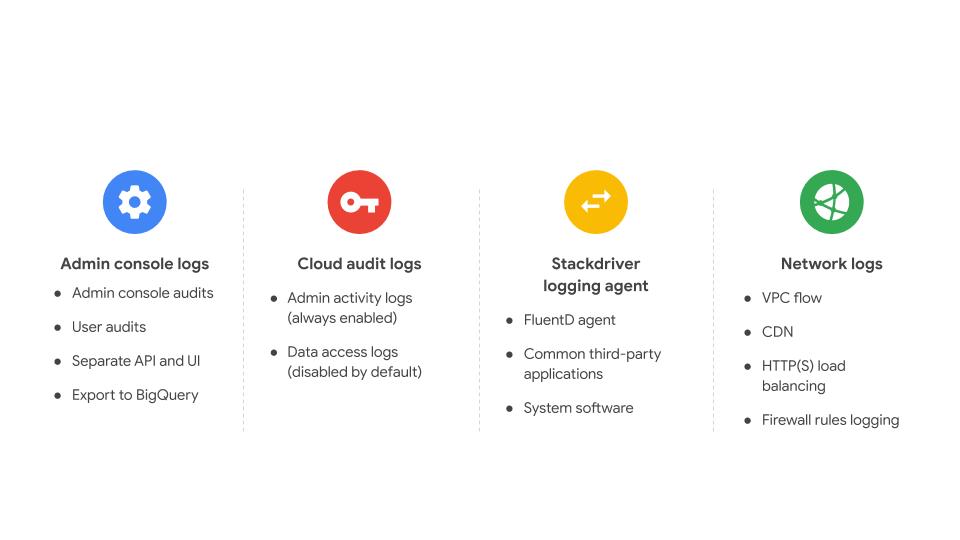

PCI Requirement 9 talks about tracking and monitoring all access to network resources and cardholder data. There are multiple logging options available in GCP to help you achieve this.

Stackdriver Logging collects data from many sources, including Google Cloud Platform, VM instances running the Stackdriver Logging agent (a Fluentd-based agent), and user applications.

Effective log entries answer the following questions:

- Who or what acted?

- Where did they do it?

- When did they do it?

Audit logs are available for most GCP resources and services.

Audit logs come in two types:

- Admin Activity

- Data Access
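To show how an audit log entry answers the who, where, and when questions, the Python sketch below pulls those fields out of an entry represented as a dictionary. The sample entry is fabricated for illustration (project, instance, and user are hypothetical); the field names (`protoPayload.authenticationInfo.principalEmail`, `methodName`, `resourceName`, `timestamp`) and the `cloudaudit.googleapis.com%2Factivity` and `%2Fdata_access` log name suffixes follow the audit log entry format.

```python
# Fabricated sample entry for illustration only.
SAMPLE_ENTRY = {
    "logName": "projects/my-project/logs/cloudaudit.googleapis.com%2Factivity",
    "timestamp": "2019-06-01T12:00:00Z",
    "protoPayload": {
        "serviceName": "compute.googleapis.com",
        "methodName": "v1.compute.instances.delete",
        "resourceName": "projects/my-project/zones/us-central1-a/instances/cde-web-1",
        "authenticationInfo": {"principalEmail": "alice@example.com"},
    },
}


def audit_log_type(entry):
    """Classify an entry as Admin Activity or Data Access from its log name."""
    log_name = entry.get("logName", "")
    if log_name.endswith("cloudaudit.googleapis.com%2Factivity"):
        return "Admin Activity"
    if log_name.endswith("cloudaudit.googleapis.com%2Fdata_access"):
        return "Data Access"
    return "Other"


def summarize_audit_entry(entry):
    """Extract who acted, what they did, where, and when from an audit log entry."""
    payload = entry.get("protoPayload", {})
    return {
        "who": payload.get("authenticationInfo", {}).get("principalEmail"),
        "what": payload.get("methodName"),
        "where": payload.get("resourceName"),
        "when": entry.get("timestamp"),
        "type": audit_log_type(entry),
    }


if __name__ == "__main__":
    for key, value in summarize_audit_entry(SAMPLE_ENTRY).items():
        print(f"{key}: {value}")
```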

GCP PCI Log Collection


Contact our GCP Security experts for a FREE GCP Security consultation, today!